The big deal about NVMe is twofold. First, it operates on the PCIe bus, which means it cuts out the SATA host controller (and the AHCI protocol that goes with it) entirely. That means lower latency, more bandwidth and less CPU overhead during some tasks. It's important to note that these things may affect your perceived performance, but they very well may not. Don't assume bigger number == better. If you're not saturating what you have, more isn't getting you anything. Again, big numbers don't always translate into seconds saved, which is really how we perceive performance.
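For a sense of scale on "more bandwidth", here's a back-of-the-envelope sketch in Python. It assumes a SATA III link (6 Gb/s, 8b/10b encoding) and a PCIe 3.0 x4 link (8 GT/s per lane, 128b/130b encoding), which is common for consumer NVMe drives but not universal. These are interface ceilings only. The drive itself usually can't hit them, and as above, hitting them doesn't mean you'll notice.

```python
# Rough link-bandwidth ceilings after line encoding, before protocol overhead.
# Assumes SATA III and PCIe 3.0 x4; real drives land below these numbers.

def link_ceiling_mb_s(gigatransfers_per_s: float, lanes: int,
                      payload_bits: int, coded_bits: int) -> float:
    """Usable throughput in MB/s (decimal, 10^6 bytes) for a serial link."""
    bits_per_s = gigatransfers_per_s * 1e9 * lanes * payload_bits / coded_bits
    return bits_per_s / 8 / 1e6

sata3 = link_ceiling_mb_s(6.0, lanes=1, payload_bits=8, coded_bits=10)
pcie3_x4 = link_ceiling_mb_s(8.0, lanes=4, payload_bits=128, coded_bits=130)

print(f"SATA III ceiling:    {sata3:7.0f} MB/s")
print(f"PCIe 3.0 x4 ceiling: {pcie3_x4:7.0f} MB/s")
print(f"ratio:               {pcie3_x4 / sata3:7.1f}x")
```

That works out to roughly 600 MB/s versus roughly 3,900 MB/s of raw pipe, which is where the headline sequential numbers come from.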

Second is parallelism, simply the ability to do more things at once. SATA has a single command queue that lines up commands for the drive, and it can hold up to 32 of them. NVMe has a theoretical limit of 65,536 (256^2) queues with 65,536 commands each. Now, before you fawn over that ridiculously large number, consider how quickly these drives operate (and thus how quickly a single command gets executed) and how much you'd have to be asking of them to even exhaust that 32-command queue. In home scenarios, you're likely going to run out of CPU or RAM before you tax a basic SATA SSD.
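The "how much you'd have to be asking of it" part can be put in numbers with Little's law: commands in flight = completion rate × time each command takes. A minimal sketch, assuming a hypothetical round figure of 100 µs per command (not a measured spec). Real latency climbs as the queue fills, so treat the result as a rough lower bound on how hard you'd have to push.

```python
# Little's law: outstanding commands = IOPS x per-command latency,
# so the IOPS needed to keep N commands in flight is N / latency.

def iops_needed_to_fill(queue_slots: int, latency_s: float) -> float:
    """IOPS you'd have to sustain to keep `queue_slots` commands outstanding."""
    return queue_slots / latency_s

SATA_NCQ_SLOTS = 32
NVME_MAX_SLOTS = 65_536 * 65_536  # 65,536 queues x 65,536 commands each

latency = 100e-6  # assumed: 100 microseconds per command (hypothetical)

print(f"NVMe max outstanding commands: {NVME_MAX_SLOTS:,}")
print(f"IOPS to keep 32 in flight:     {iops_needed_to_fill(SATA_NCQ_SLOTS, latency):,.0f}")
print(f"IOPS at QD1, same latency:     {iops_needed_to_fill(1, latency):,.0f}")
```

Keeping even the 32 SATA slots full at that latency means sustaining hundreds of thousands of small operations per second, which desktop workloads don't come close to.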

There are two legit cases where NVMe excels: extremely large databases with a metric fuckton of concurrent users/processes (think Amazon, Google, Wall Street, the NSA, etc.) and virtualization. For Luddites, that's when you use a computer to emulate several smaller computers. Every time you log onto, say, a bank app on your phone, the computer at the bank may spin up a little private space with its own version of the banking app, let you do your thing, and then nuke it out of existence. Given how much is constantly happening in user spaces like that across the internet, you can see how a drive that can do a shitload of things at once would be valuable.

Where it's not valuable is browsing the web, playing games or recording music, because none of those things are terribly demanding on the drive, so neither the software nor the OS is set up to use that added queue depth. Anyone who sees a large benefit from upgrading to NVMe over SATA is either not taking into account something else that's changed, has a bizarre edge case that they're not explaining, or has a bad case of confirmation bias.

In the early days of SSDs, the difference between Pro and normal SSDs tended to be about both speed and longevity. The introduction of 3-D NAND chips and better fabrication has mostly done away with that, and now they just charge you more for more warranty, which is fine if you want to pay it.

So let's break it down:

https://imgur.com/zJOMoN2

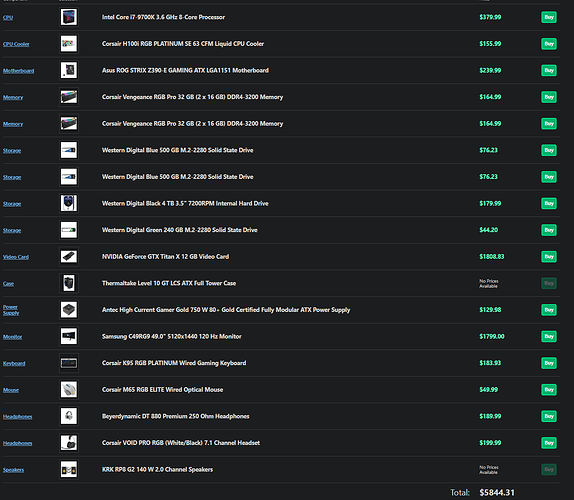

That’s the pertinent info. NVMe blows the SATA ones out of the water on Sequential Read/Write, which isn’t particularly useful and is a datapoint artifact from the days of spinning drives. All it tells you now is the theoretical max transfer, which you might approach when copying a single huge file.
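Copying a single huge file is the one case where sequential speed turns into seconds you can feel. A rough sketch, assuming hypothetical sustained speeds of 550 MB/s and 3,500 MB/s (not the figures from the table), a hypothetical 50 GB file, and that the other end of the copy can keep up, which it often can't.

```python
# Time to copy one large file at a given sustained sequential speed.
# Speeds and file size are hypothetical round numbers for illustration.

def copy_seconds(file_gb: float, mb_per_s: float) -> float:
    """Seconds to move `file_gb` gigabytes at `mb_per_s` (decimal units)."""
    return file_gb * 1000 / mb_per_s

FILE_GB = 50  # assumed: e.g. a disk image or a big video project

for label, speed in [("SATA SSD", 550), ("NVMe SSD", 3500)]:
    print(f"{label}: {copy_seconds(FILE_GB, speed):6.1f} s")
```

If you do that all day, the gap matters. If you do it twice a year, it doesn't.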

QD1 vs QD32: that's queue depth, the stack of commands waiting on the drive. They don't list QD65536 because anyone concerned with that is talking directly to the manufacturer or their storage provider. You don't care about QD32; you're concerned with QD1, which is where a drive will be in 99% of home use.

QD1 Random Read/Write: this gives a good indication of how drives stack up in everyday use. You'll notice they're pretty damn close. By comparison, a 10k RPM HDD manages around 180 IOPS (input/output operations per second). That ridiculous difference is why you see a massive speed increase moving from HDD to SSD, and why you probably shouldn't care about a difference of 1,000 IOPS between drives.
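To see why 1,000 IOPS between two SSDs is noise next to the HDD gap, here's a quick sketch timing a burst of small random reads serviced one at a time (QD1). The 180 IOPS HDD figure is from the text above; the two SSD values and the 20,000-read burst are hypothetical, picked only so the SSDs sit 1,000 IOPS apart.

```python
# Time to service a burst of small random reads at QD1, given a drive's IOPS.

def seconds_for(reads: int, iops: float) -> float:
    """Seconds to complete `reads` operations at a steady `iops` rate."""
    return reads / iops

READS = 20_000  # assumed burst size (arbitrary)

drives = {
    "10k RPM HDD": 180,
    "SSD A (hypothetical)": 12_000,
    "SSD B (hypothetical)": 13_000,
}

for name, iops in drives.items():
    print(f"{name:22s} {seconds_for(READS, iops):8.2f} s")
```

The HDD takes close to two minutes; the two SSDs differ by about an eighth of a second.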

MTBF: Mean Time Between Failures, a statistical estimate of how often drives fail across a large population under standard conditions, not a promise of how long yours will last. You'll notice that both the Pro and the NVMe are rated no more reliable than the cheap one. For people who make their living on storage, MTBF is both a useful eyeball estimate and a waste of time for a lot of complicated reasons. For people who have single digits of drives in their home, plan on getting 4-5 years; anything longer is borrowed time.
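One reason MTBF is easy to misread: a rating like 1.5 million hours is about 171 years, which nobody expects a single drive to survive. A common way to make it concrete is converting it to an annualized failure rate (AFR), the fraction of a large fleet expected to fail per year. This sketch assumes a constant failure rate (exponential model) over the drive's service life, and the MTBF values are hypothetical, not the ones from the table.

```python
import math

# Convert a rated MTBF (hours) to an annualized failure rate, assuming a
# constant failure rate across a large population of identical drives.
HOURS_PER_YEAR = 8760

def afr_from_mtbf(mtbf_hours: float) -> float:
    """Expected fraction of drives failing within one year of operation."""
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)

for mtbf in (1_500_000, 2_000_000):  # hypothetical ratings
    print(f"MTBF {mtbf:>9,} h -> AFR {afr_from_mtbf(mtbf):.2%}")
```

Both come out well under 1% per year, a difference you'd only see across a data center's worth of drives, not the handful in your house.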