Disclaimer: Always take what I have to say with a grain of salt, and then chuck that into the ocean to dissolve!

@Manton

Let’s see if we can all come up with some solutions, and bear in mind that I haven’t put some of what I’m going to suggest into practice just yet.

Lurv Canva, but haven’t used it in ages, and when I saw it as part of Playground, I thought that would be neat to play with. Started using it for a bit, and had to shut it down to go work on my primary form of art, music! While I do firmly believe we should be able to utilize Canva + Playground to do what you want to accomplish, I’ve also read in various internet rants that it isn’t possible in that service, nor is it possible in Midjourney. Some have said they’ve been able to do something similar in HitPaw, but once again I haven’t had time to explore using it.

Some of it is truly all about spending time with it, and just trial and error… probably more error… unless one is a working professional artist who is already familiar with all of the precise lingo/terms and so on. It’s like using ChatGPT to write hit songs (which I’ve watched a pro do): you need to sit down with it and correct its mistakes when it gets inversions wrong, doesn’t recognize the proper stanza, and so on. Keep chatting to it till it refines the idea to what you’re looking for, and then maybe ya have a Michael Jackson hit?

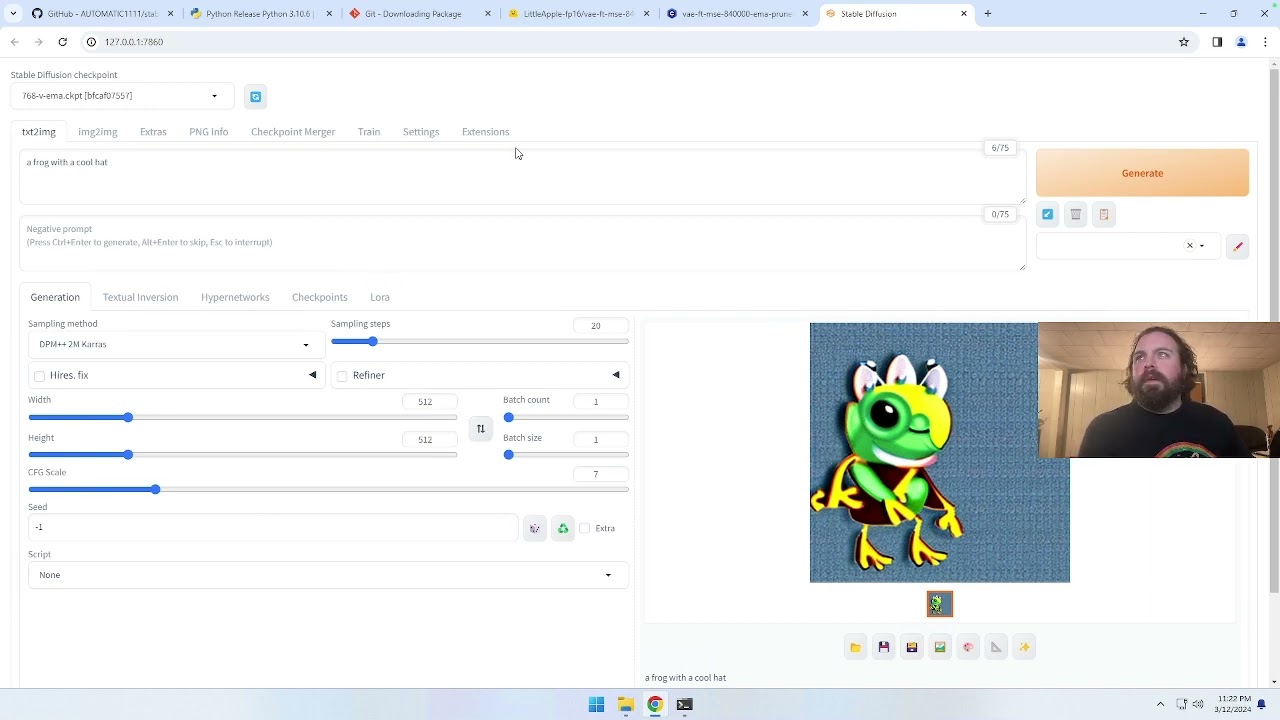

Here are a few suggestions I’ve used with any of these AI generator thingamaBOBs:

(Type of art) (fractal, oil, baroque, impressionism, cubism art) of [subject], (Style) (folk, tattoo, graffiti, etc.), [theme/material/element], (tone, i.e. glowing, vivid, intricate), (color references for any object or primary source), (resolution, i.e. 8K), HD, digital art --v 4 --q 2
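If you end up reusing that template a lot, here is a rough Python sketch that fills the slots and glues them together. The function name `build_prompt` and its argument names are made up for illustration, and I’m assuming the trailing bit of the template is meant as Midjourney-style `--v` (version) and `--q` (quality) flags; other generators may ignore or reject them.

```python
def build_prompt(art_type, subject, style, theme, tone, colors,
                 resolution="8K", version=4, quality=2):
    """Fill the template slots and join them into one comma-separated prompt.

    Hypothetical helper: the slot names simply mirror the template above.
    """
    parts = [
        f"{art_type} of {subject}",
        style,
        theme,
        tone,
        colors,
        resolution,
        "HD",
        "digital art",
    ]
    # Skip any slot left empty so the prompt has no stray commas.
    text = ", ".join(p.strip() for p in parts if p and p.strip())
    # Assumption: Midjourney-style trailing flags for version and quality.
    return f"{text} --v {version} --q {quality}"


prompt = build_prompt(
    art_type="fractal art",
    subject="a lighthouse",
    style="graffiti",
    theme="stained glass",
    tone="glowing, intricate",
    colors="teal and gold",
)
print(prompt)
```

Swapping one slot at a time (say, only the tone) and comparing results is an easy way to do the trial and error a bit more systematically.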

another example:

Design a techno-organic extraterrestrial in a Futuristic Sci Fi professional masterpiece portrait, fractal art, similar to art by Danny Flynn, Julien Allegret, Charlie Vicetto, and Paul Griffitts. (add a few other variables, or details like colors, shapes for background patterns, etc.)

Using the exclude option can also help, with terms like:

bad proportions, beyond borders, cropped, branding, craft, duplicate, grains, grainy, gross proportions, improper scale, low quality, low resolution, misshapen, mistake, outside the picture, unfocused, watermark
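Exclude lists like the one above tend to grow messy when you keep pasting terms in, so here is a small sketch for tidying one up before you use it. `build_exclude` is a hypothetical helper name; where the resulting string goes depends on the tool (a separate exclude box in some, and as far as I know after a `--no` flag in Midjourney, so treat that part as an assumption).

```python
def build_exclude(terms):
    """Normalize, de-duplicate (keeping first-seen order), and join exclude terms."""
    seen = set()
    cleaned = []
    for term in terms:
        key = term.strip().lower()
        # Drop blanks and anything already in the list.
        if key and key not in seen:
            seen.add(key)
            cleaned.append(key)
    return ", ".join(cleaned)


exclude = build_exclude(["Watermark", "cropped", "watermark ", "low quality", ""])
print(exclude)
```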

Hoping this rant helps, maybe inspires others to chime in (so we can all get the best out of the bots!!!), or maybe has the haters come out and say “This idiot don’t know what he is talking about, this is how you do it: (insert arsehole’s solution)”

best of