OK. I have a free moment, so here’s a crash course on what’s going on behind the scenes with the system.

The basic concept, as an analogy: if you hold two types of objects in your hands and you want to know how they differ from each other in their physical nature, one of the easiest ways to discern that is to throw each object against the same wall.

That wall is the equalizer for each of the objects. If one of the objects bounces back 3 meters and the other bounces back 5 meters, and you threw them with the same controlled force, then you know something about how they differ right away. One of them is more dense than the other, and/or one of them is made of more reflexive material.

Now, further, if you wanted to know if it was a matter of density versus the material’s reflexive nature, you could then take each object and place them in water. The one that displaces more volume by proportion is more dense than the other. If they differ here, then you can say that one is more dense than the other and that this is likely how they differ in bouncing off of the wall. If they don’t differ here, then you can presume that one is made of more reflexive material, and that accounts for their difference in bouncing behavior.

In like fashion, that -23 in the Advanced User Settings is the wall that songs are thrown against first, and the 18 for the DNR is like the water that each is placed into next.

We’ll get to the bandwidth in a moment; for now, we’ll just focus on these two.

So the calculations start by comparing the sample song’s LKFS against an LKFS of -23 and jotting down the difference.

So, if the song’s LKFS is -14, then it would have a difference of 9 from -23.

Next, we flip to tossing it into the water. So the song’s DNR profile is compared against the DNR of 18 and again, the difference is noted and kept in memory. So a song with a DNR of 12 would have a difference from 18 of 6.

Now, we then combine them through Mathimagical Voodoo (which…I won’t go into here) so that we don’t end up with ridiculously high values. Meaning, we don’t simply add them up and say that the value is 15. That’s WAY too heavy-handed of an adjustment, and would produce terrible results.

Instead, again, some voodoo goes on and they are each chopped down by the same factor (4 is that factor actually) and we get 2.25 + 1.5 = 3.75.

Now we take the original LKFS of -14 and LOWER it by the amount we just arrived at, which gives us -17.75.
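Here’s a minimal Python sketch of the arithmetic so far. The function and parameter names are made up for illustration, and it assumes the differences are taken as absolute values; the actual calculations may handle signs differently.

```python
def lkfs_correction(song_lkfs, song_dnr, ref_lkfs=-23.0, ref_dnr=18.0, factor=4.0):
    """Return (correction, corrected LKFS) for one song.

    ref_lkfs and ref_dnr are the "wall" (-23) and the "water" (18).
    factor is the shared divisor (4) that chops both differences down.
    """
    lkfs_diff = abs(song_lkfs - ref_lkfs)  # e.g. |-14 - (-23)| = 9
    dnr_diff = abs(song_dnr - ref_dnr)     # e.g. |12 - 18| = 6
    correction = lkfs_diff / factor + dnr_diff / factor
    # The original LKFS is LOWERED by the correction.
    return correction, song_lkfs - correction


correction, corrected = lkfs_correction(-14.0, 12.0)
print(correction, corrected)  # 3.75 -17.75
```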

Woooh-oh! We’re halfway theeEere! (sorry, couldn’t resist).

The LKFS and DNR are effectively done. Now for the bandwidth.

The bandwidth is like looking at the size of the object instead of its response/behavior and density.

Sometimes, the sheer size of an object might be the reason that it behaves differently than another, and not what it’s made of or its density.

So, to get that picture, we look at the Audacity data, the bandwidth. This is where things get really hairy in the math, so I’ll do my best to keep it simple and detailed at the same time (scratches head).

The way that you get the size of something is by measuring its height and width, and then you can scale its surface area by checking the radius and employing Pi (well…for round objects anyway).

Likewise we’re going to check the total bandwidth range, then we’re going to check the dB range of those frequencies. This gives us our height and width bit. Width is the bandwidth range, and height is the dB range.

Now we need to “check against the radius and employ Pi”…ish.

To do that, we need to create a simple ratio, like rise over run, or a TV’s aspect ratio. We just want one simple number, and that is: what is the ratio of Bandwidth Range to dB Range? So we divide the first by the second.

Bam. Now we have a huge number. Eegads!

No problem, we’ll get back to that in a moment.

Firstly, there’s a bit of a pit-stop that doesn’t fit the analogy here because we also toss the DNR back into the fixing. Why? Well, because the spread of the LKFS range (which is the DNR) is important to the weight of the frequency range. If we just took only the frequency information and ignored how tightly packed they are by dynamic LKFS range, then we would run into an imbalance.

So we take that very large Eegads value and multiply it by the DNR value. GAAAH! Now that giant number is even bigger!

No worries. We’re going to go on to the last part of the analogy and get the surface area in “per square inch” style. That is, we’re going to tell how big by how long it is per the density involved (the DNR).

We actually have all of this already, but the number is just vastly oversized, so we need to scale it back down.

To scale it back down, we divide by 1,000*Pi.

Pi to keep it in proportion, and 1,000 because that’s the caliber in decimal places we jumped up into from our starting point.

So, for the bandwidth of the song that’s loaded in the document by default, the result is 3.9, from a starting position of 21963 as the Bandwidth range, and a dB range of 22.4 (btw, the dB range is what’s called the “AFB” in the calculations and math parts).
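As a rough Python sketch of the bandwidth steps above (ratio, multiply by DNR, divide by 1,000*Pi): the function name is hypothetical, and the DNR of 12.5 is an assumed value for illustration only, since the default song’s DNR isn’t stated here. With that assumption, the numbers land on roughly the 3.9 quoted above.

```python
import math


def bandwidth_correction(bandwidth_range, db_range, dnr):
    """Scale the bandwidth 'size' of a song down to a small correction value."""
    ratio = bandwidth_range / db_range  # width over height (dB range is the "AFB")
    weighted = ratio * dnr              # toss the DNR back in
    return weighted / (1000 * math.pi)  # scale the huge number back down


# 21963 and 22.4 come from the text; dnr=12.5 is an ASSUMED value.
result = bandwidth_correction(21963, 22.4, 12.5)
print(round(result, 1))  # 3.9
```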

Woohoo!

So, we take that -17.75 that we have from the LKFS and DNR correction (simply called the LKFS correction) and lower it even further by the 3.9 from the bandwidth step. This gives us -21.65.

So, relative to -23 and 18, this song spits out as roughly -21.65.

A different song would spit out a different value.

NOW I take that value and bump it up by the amount I want until I see the LKFS value that I want in the ADJUSTED OUTPUT. So I click on the dB INCREASE SETTING and bump it up. Let’s say I want -14 because I liked where this song was at (we’ll get back to why I would just return it to where it started in a moment).

So I set the INCREASE SETTING to 7. Bam. I’m pretty much back to where I started (we’ll ignore any issues with PEAKS that might happen for the moment, because if you saw issues with PEAKS highlighting in red, then you can choose to walk it down…maybe to 6…and see if that removes the issue and settle for 6 as the solution).
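Putting the pieces together for the first song, as a small Python sketch. The function name is made up for illustration, and it only chains the numbers already given above; it ignores the PEAKS check entirely.

```python
def adjusted_output(lkfs_corrected, bandwidth_corr, db_increase):
    """Lower the corrected LKFS by the bandwidth result, then add the increase."""
    return lkfs_corrected - bandwidth_corr + db_increase


# -17.75 (LKFS correction), 3.9 (bandwidth), 7 (dB INCREASE SETTING)
result = adjusted_output(-17.75, 3.9, 7)
print(round(result, 2))  # -14.65
```

That -14.65 is the "pretty much back to where I started" value relative to the original -14.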

Now back to why I return it back to what it started at.

ONLY the first song that I do returns to normal IF I WAS HAPPY with the setting (if not, then I would adjust it if, for example, I thought it was too loud).

Now that I’ve set my FIRST SONG to 7, I then go to my second song, load it up and I MUST SET IT TO 7.

If I don’t, then it won’t work right for a good balance when the two are played together.

Let’s say the second song starts out at -7 LKFS, with a DNR of 7 and a bandwidth of 21920. I set the dB INCREASE SETTING to 7 so that it’s paired with the first song, and I get a return of -13 LKFS. To accomplish this, I reduce the song by 6 dB in my DAW and re-render it out.

The first song, since I was happy with it, doesn’t change. I changed it by +/-0 dB so I didn’t need to re-render it from the DAW.
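The DAW change for each song is just the gap between where it started and where the ADJUSTED OUTPUT says it should be. A tiny Python sketch, with a hypothetical function name, and treating the first song as landing back on its original -14 as described above:

```python
def daw_gain_change(original_lkfs, adjusted_lkfs):
    """dB change to apply in the DAW; negative means turn the song down."""
    return adjusted_lkfs - original_lkfs


first_song = daw_gain_change(-14, -14)  # happy with it, so no change
second_song = daw_gain_change(-7, -13)  # returns -13, so turn it down
print(first_song, second_song)  # 0 -6
```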

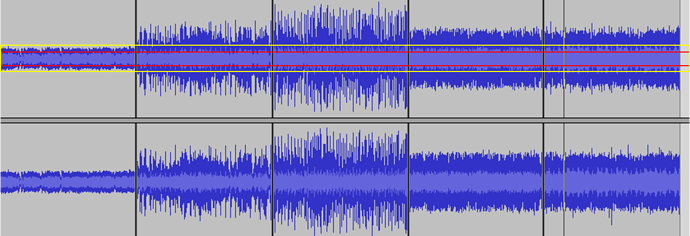

So now, if I play them next to each other, they sound like this: https://www.dropbox.com/s/9264nvcgfkw5ih6/loudness%20calculator%20example.mp3?dl=0

Now, the thing that remains untested, and which I need feedback on, is whether it’s working for lots of variations on this. It might be that the LKFS considerations need to be more heavily weighted than they currently are (that’s a possibility). So basically, I have faith in the concepts of the calculations and the approach, but the weighting might need to be adjusted to work properly in broader use than just my own songs.

Cheers,

Jayson
