I was able to pull all the data this morning and finish calculations for the first song in my sample. I should be able to run the rest tonight with any luck. What I’m looking for, rather than trying to balance different kinds of songs and validate it with what my earballs tell me, is to see all the songs come up with about the same numbers since they’re all off the same album, even though they present different dynamics. The frequencies are going to be pretty similar across the tracks, but that part is still being tested somewhat. FWIW, all the samples so far have been so jarring that even if they came out to have the same exact loudness at time of transition, the sudden change in bandwidth is fooling my ears into thinking the newer stuff is still louder. I think you should crossfade between songs a bit and hopefully that will help the songs sound closer in volume than they do now.
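For anyone who wants to try the crossfade idea, here is a minimal sketch of an equal-power crossfade between the end of one track and the start of the next. It assumes each track is already a plain list of float samples at the same sample rate; the function name `crossfade` and the `overlap` parameter are made up for illustration and are not from any tool mentioned in this thread.

```python
import math

def crossfade(a, b, overlap):
    """Blend the last `overlap` samples of `a` into the first `overlap` of `b`.

    Uses equal-power (cosine/sine) gain curves so the perceived level stays
    roughly steady through the transition. `a` and `b` are lists of floats.
    """
    if overlap <= 0 or overlap > min(len(a), len(b)):
        raise ValueError("overlap must be between 1 and the shorter track length")

    start = len(a) - overlap
    mixed = []
    for i in range(overlap):
        t = (i + 0.5) / overlap                # position in the fade, 0..1
        gain_out = math.cos(t * math.pi / 2)   # outgoing track fades down
        gain_in = math.sin(t * math.pi / 2)    # incoming track fades up
        mixed.append(a[start + i] * gain_out + b[i] * gain_in)

    # Untouched head of a, the blended region, then the untouched tail of b.
    return a[:start] + mixed + b[overlap:]

track_a = [1.0] * 8
track_b = [0.5] * 8
joined = crossfade(track_a, track_b, overlap=4)
```

The joined result is shorter than the two tracks laid end to end by exactly `overlap` samples, since that region is shared.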

Mixing/Mastering Loudness Equalizing Tool (anyone interested?) - calling all engineers!

Oh, that’s an interesting test! I wouldn’t have thought of that; good idea. As long as the songs are of relatively equal personality (one not being a nice soft thing, and another being a blow your doors off style…which I’m sure you already have in mind) then yeah…it should return values along relatively the same lines.

I think another good band for that kind of test would be the early Green Day albums because everything is pretty much just a wall of high gain distortion guitars on moderate speed until the end of the album, and their songs at the time were very basic with little variation in the playing. It was usually just a handful of chords, slam the distortion pedal, never stop down strumming until the end.

Good idea on the crossfade. I’ll look into that.

Cheers,

Jayson

I’m pretty sure I have a good rip of Nimrod, it’s one of my top 5 albums (believe it or not). I can give that a shot a bit later, but I want to get Breakfast in America done first.

This morning I continued on with the diverse songs testing and refined it a bit further.

So I’m on the fence on this - sometimes when I listen I think one way; other times I hear it another.

That’s how close I think I’ve narrowed things down at this point (at least in regards to dialing in the functionality improvement - the calculations are an absolute F***ing mess atm so a LOT of cleanup will be needed if this holds). Basically, every small adjustment I make has the possibility of sending the whole train off the rails in either direction - it’s like finely zeroing in a sniper rifle for a 1.5 mile shot.

As such…everyone (@White_Noise @Ag_U @Multicellular @chasedobson @relic @Brogner): OPINION TIME: which is better here, A or B?

Same songs in the same order as before.

What’s the difference?

A difference of 0.5 in the weighting factor on the DNR.

This is effectively only noticeable (if at all…some folks may not even hear it) on Green Day, and that was the fine-tuning here. After accounting for big band (funk, swing, etc…) and older recordings, some of the personalities of the adjustments negatively impacted “compressed wall of distorted guitars” songs too much. This adjustment (both of them) pushes that style (like Green Day) back up where it should be.

[content deleted for possibly poor quality: See this post below to review the songs instead]

Thoughts?

Cheers,

Jayson

The drums in jungle boogie sound a little less buried/ more dynamic (?) in A compared to B (lol from what I could tell)

actually I like A a lot better

I really don’t have a clue what the fuck is going on but in order

The original

A

B

The sub seems more noticeable in B, but not in a good way.

Thanks for that bit about the quality @Brogner. That could have been because I wasn’t being careful with quality control while I was doing that - just focused on the balance between…SO!

I want to make double-sure that the issue wasn’t caused by poor-quality rendering, considering what you were talking about.

So…I’ve re-rendered (properly this time so that everything’s playing the exact same sections), and I’d like everyone to take a listen to this instead (I edited the previous post to just point to here).

Instead of the original, I’m giving an approximation of the standard method for streaming currently, Treatment A, and Treatment B.

Current Streaming Standard (as an average):

Treatment A (DNR weight 4.5):

Treatment B (DNR weight 5):

Cheers,

Jayson

I don’t know whether to shit or adjust my wrist watch, lol this is out of my realm. Sounds good? loving this thread

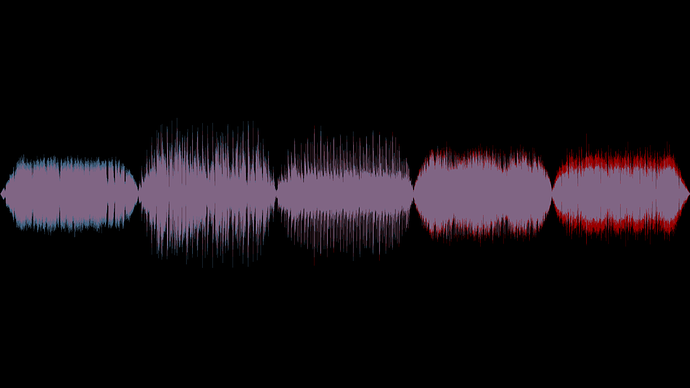

I generated waveforms of both, made them transparent a bit and layered them to see the difference visually.

Red is the standard stream method.

Blue is the DNR weight 5 (treatment b).

Purple is where they are the same.

I’m personally pretty pleased with how it treats the older music and newer electronic music.

Cheers,

Jayson

I liked B because it seemed to me like it was doing the least. I’m especially paying attention to jungle boogie and sedated here because of their dynamics, and in A they definitely sound like they’re being pushed a bit and end up kind of crowded to my ear.

I tend to agree there.

I’ve even lightened it a tad more on the weight to open it up a bit more for those kinds of tracks.

I have more freedom to do that than I originally did because I’m able to leverage the volumes in specific frequency ranges and adjust with more nuance. So I don’t need the DNR as much to fix Skrillex, for example, because it sees his massive -8 dB low end, meanwhile Ellington rang in somewhere in the -50’s iirc.

Before, I had to rely on DNR to assume everything about how the space was used in a compressed song.

My math is a mess atm, but I’m now sampling and weighting 5 different aspects of a song whereas before it was 3 (compared to the standard, which samples 1 aspect: average loudness…which is a log10 K-weighted “long-term” sampling of volume only).

I think my next test will be ambient, classical, heavy metal, and hip hop…which should be one hell of a stress test (and probably turn out to be a pain in the ass).

Cheers,

Jayson

Today’s notes.

Started the second test. Initial impressions. Very messy. Needs more tweaking.

A) Classical, ambient, and solo piano music threw a curve ball at me (but it’s one I think I can manage).

B) On the other hand…of all things…Tom Petty!! has proved to be one of the hardest challenges. I’ve thrown my hands up in frustration countless times trying to figure out how to discern that profile from over-compressed and loud profiles (Tom Petty’s music can look very, very flat, but it’s not from massive compression - so the DNR can be pretty low, but because the LKFS isn’t massive, it’s not bad - it’s just that they’re filling up the space with a lot of - usually - sustained strikes on everything). CURSE YOU TOM PETTY FOR NOT PLAYING LIKE EVERYONE ELSE!! lol

Cheers,

Jayson

This run (everything in this thread) has gotten very close, but I think that holy grail I’m after will require a rebuild of the entire core model; this time starting with the frequency band in groups and using that as a way of determining how to approach the LKFS and DNR, rather than slapping the frequency factoring on separate from LKFS and DNR as a simple additive.

This is going to be an overhaul, but I hope to get higher fidelity of reflection out of it.

Cheers,

Jayson

p.s. @White_Noise still interested in yours.

I hear ya.

I’ve only gotten so much done because my job happens to look like I’m working as long as I have monitors filled with spreadsheets and data everywhere, lol.

And this rebuild is … ugh…going to take some motivation; gotta catch my second wind. ATM, just thinking about it is a bit big. I’ll get there though. Come on enthusiasm!

Cheers,

Jayson

After running all the songs through the calculator, the results are respectable. The three middle songs all came out around -13.5 dB, with varying dynamics between them, though similar bandwidth. There was one higher at around -11.7 and one lower at -14.5. I didn’t do any favors for Audacity’s frequency plots, just ran them straight, and some of these have long lead-in times. I’ll take another look at the outliers and see if they get closer with a better frequency analysis.

Nice!

I forget, were you using version 2.2?

In other notes…I got the extreme basics started on Beta 3.0.

3.0 will put the Audacity data under a microscope, and to do that I needed to break the data down by range bins.

That part has been done.

(This is Skrillex’s song, Bangarang.)
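As a rough illustration of the range-bin step, here is a minimal sketch that groups a spectrum export into bands and averages the level in each. It assumes the data is tab-separated text with a frequency column and a level column, which is my understanding of what Audacity's Plot Spectrum export produces. The band names and edges are hypothetical, since the actual bins used in the workbook aren't stated here.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical band edges in Hz; the real bins in the workbook are not stated.
BANDS = [
    ("sub", 20, 60),
    ("bass", 60, 250),
    ("low_mid", 250, 500),
    ("mid", 500, 2000),
    ("high_mid", 2000, 4000),
    ("presence", 4000, 6000),
    ("air", 6000, 20000),
]

def parse_spectrum(text):
    """Parse 'frequency<TAB>level' rows, skipping headers and bad lines."""
    rows = []
    for line in text.strip().splitlines():
        parts = line.split("\t")
        try:
            rows.append((float(parts[0]), float(parts[1])))
        except (ValueError, IndexError):
            continue  # header row or malformed line
    return rows

def bin_levels(rows, bands=BANDS):
    """Return {band_name: mean level} for every band that has data.

    Note: this is a plain arithmetic mean of the dB values, which is a
    simplification and not a power average.
    """
    grouped = defaultdict(list)
    for freq, level in rows:
        for name, low, high in bands:
            if low <= freq < high:
                grouped[name].append(level)
                break
    return {name: mean(levels) for name, levels in grouped.items()}

sample = (
    "Frequency (Hz)\tLevel (dB)\n"
    "43.0\t-8.0\n"
    "86.0\t-10.0\n"
    "172.0\t-14.0\n"
    "1000.0\t-30.0\n"
)
binned = bin_levels(parse_spectrum(sample))
```

Bands with no data points are simply left out of the result, so a narrow-bandwidth recording shows up as missing bins, not as zeros.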

Next is the more challenging part: converting dBFS, which is Audacity’s way of delivering information. Luckily, LKFS and LUFS are 1:1 in range with dBFS, but the tricky part will be working out how much of any one bin is too much or too little. The standard Fletcher-Munson curves are effectively useless here because their scale relies on dB SPL, which is a physical metric of air pressure and doesn’t relate directly to LKFS/LUFS or dBFS. (In a given studio or environment you can calibrate so that, say, -23 dBFS equals 83 dB SPL, but that means pretty much nothing outside of that environment, especially when you’re receiving the material blind, as we do as listeners and not as the producer.) So I’ll have to tinker a bit to figure this one out.
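To make the calibration point concrete, here is a small sketch of what a room calibration actually does: it is just a fixed offset between dBFS and dB SPL. The -23 dBFS = 83 dB SPL pairing is the example from the paragraph above; the second room's 79 dB SPL figure and the function name are made up for illustration.

```python
def dbfs_to_spl(level_dbfs, ref_dbfs=-23.0, ref_spl=83.0):
    """Map a dBFS level to dB SPL for ONE calibrated playback environment.

    The mapping is a constant offset (ref_spl - ref_dbfs), valid only in the
    room where that calibration was measured.
    """
    return level_dbfs + (ref_spl - ref_dbfs)

# Same file level, two differently calibrated rooms.
studio = dbfs_to_spl(-14.0)                    # calibrated to 83 dB SPL
living_room = dbfs_to_spl(-14.0, ref_spl=79.0) # hypothetical quieter setup
```

The same -14 dBFS passage lands at a different SPL in each room, which is why equal-loudness curves defined in dB SPL can't be applied to the file data on their own.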

I have a few ideas based around using sine waves to generate tests in specific ranges and see how that goes, but it’ll probably be a lot of pulling my hair out for a while until I figure out a way to do the impossible (according to industry convention).

Cheers,

Jayson

Could you normalize the song and then look at the voltage that would be put out in a given band? Consumer line level is nominally around 2 volts RMS at full scale, so you could look at how close any one band is getting to that absolute limit, which does translate to loudness before the amps (which is where I assume any per-environment calibration would take place).
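A quick sketch of that voltage idea, assuming the 2 volt full-scale figure above and treating 0 dBFS as that limit. The function names are hypothetical. It uses the standard amplitude relation, voltage = full scale × 10^(dBFS / 20).

```python
import math

FULL_SCALE_VOLTS = 2.0  # assumption taken from the post: 0 dBFS maps to 2 V

def dbfs_to_volts(level_dbfs, full_scale=FULL_SCALE_VOLTS):
    """Convert a band level in dBFS to volts, given the full-scale voltage."""
    return full_scale * 10 ** (level_dbfs / 20.0)

def volts_to_dbfs(volts, full_scale=FULL_SCALE_VOLTS):
    """Inverse conversion: volts back to dBFS."""
    return 20.0 * math.log10(volts / full_scale)

low_band_volts = dbfs_to_volts(-8.0)    # e.g. a heavy low end at -8 dBFS
quiet_band_volts = dbfs_to_volts(-50.0) # e.g. a quiet band at -50 dBFS
```

Because the conversion is a fixed rescaling, the voltage view carries the same information as the dBFS reading, just on a linear scale.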

Maybe, but the issue isn’t just a hard reference point, because -23 dBFS does well for that on average. The issue is each frequency’s different impact on our perception of loudness.

Bass-heavy and brass-heavy material can both seem loud when, by the meter, everything’s even.

Other times it can be about the attack of a sound. If you have a piano at -14 LKFS, it could sound even louder if it is played with a sharp attack and longer releases (soft piano with marked note strikes) because of the frequency content and the peaks.

It’s a pile of weird little dynamics that add up.

Interestingly, if you take the average dBFS of each of the frequencies, and then average those averages, you often get relatively close to the average LKFS you would get from a loudness tool (approximately, but not close enough to use in place of it).
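The average-of-averages observation can be sketched like this. The band names and the numbers are invented for illustration, and this is a plain arithmetic mean of dBFS values, not a real LKFS measurement (which applies K-weighting and gating).

```python
from statistics import mean

def mean_of_band_means(band_levels):
    """Average the dBFS readings in each band, then average those averages.

    `band_levels` maps a band name to a list of dBFS readings. Empty bands
    are skipped. Returns (overall_mean, per_band_means).
    """
    per_band = {name: mean(values) for name, values in band_levels.items() if values}
    overall = mean(list(per_band.values()))
    return overall, per_band

# Invented example readings in dBFS.
readings = {
    "low": [-8.0, -12.0],
    "mid": [-20.0, -24.0],
    "high": [-30.0, -34.0],
}
overall, per_band = mean_of_band_means(readings)
```

Each band counts equally in the final figure no matter how many readings it holds, which is one reason the result only lands near a metered LKFS value and doesn't match it.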

My current build is looking at frequency levels, frequency bandwidth, max/avg peak level, avg LKFS, and avg DNR.

How exactly it will all fit together? Not sure yet. I have to pile it up and see what happens first.

I like the idea of volt levels, but I can’t think of a way to use that to greater advantage than dBFS/LKFS (probably because I’m very familiar with these two from my last job).

Cheers,

Jayson

This is because, although the equations involved are meant to be used by evaluating multiple songs against each other, the scope of the Excel workbook limits the capability to one song PER workbook (at least for now…I may eventually extend this, but it seems safer and less complicated to simply open two workbooks, one for each song, or more…).